Seeing Isn’t Believing – Tackling AI, Misinformation and Its Impact on an Election Year

As technology evolves, so does our digital landscape. Many of us have witnessed this transformation firsthand; we can still remember the sound of dial-up internet and are also part of today's "always-on" culture. Most of the world is constantly connected and even expects instant responses via text, DM or Microsoft Teams. However, increased connectivity comes with its own challenges. Scams, once confined to traditional, more obvious methods, now leverage the latest technology to run more sophisticated schemes than ever before.

When it comes to election security, the first thought often centers on the risk of hacking voting systems or machines. However, artificial intelligence (AI) technology makes it easier than ever to manipulate public opinion without needing to breach highly secure systems. As AI continues to advance, its potential to affect nearly every facet of our daily lives, including news, careers, trends, social media and elections, should not be underestimated.

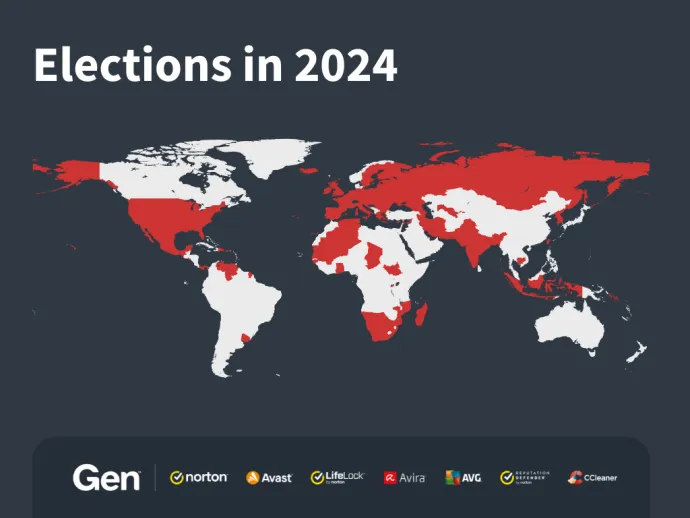

A Global Outlook

2024 has been called the ultimate election year, with 64 countries plus the European Union heading to the polls. That means there’s even more at stake for the vast majority of people who can and will participate in free and fair elections in their country.

We’ve already seen an FBI report on state-sponsored media leveraging software to manipulate public opinion through social networks.

The Influence of AI

AI seems to touch every aspect of our digital existence today, from personalized advertising algorithms to the curation of our personal content. (Admit it, you can hardly scroll on LinkedIn without seeing an ad or a tip on how to use AI.)

One deepfake video features a journalist alongside candidates from six different Spanish political parties. Although this video is intended for fun, as indicated by its hashtags, it highlights both the potential and believability of AI technology.

However, AI's impact extends beyond convenience and efficiency; it also poses significant risks. For instance, AI algorithms are already being used to manipulate elections by spreading misinformation and propaganda, whether through automated bots, curated images or deepfake videos. We see it on social media constantly. The real problem is telling real from fake. With such convincing technology, AI has the potential to erode public trust and undermine democratic processes.

Proactive Measures to Combat Misinformation

As we approach another election year, the issue of AI misinformation, particularly deepfakes, has become a pressing concern. Although the U.S. federal government has yet to take action against deepfakes, some states are taking matters into their own hands. One U.S. state, Colorado, has proactively implemented measures to combat the spread of these deceptive technologies. The state now mandates that campaign ads featuring AI-generated audio, video, or other content include prominent disclosures.

The law treats deepfakes (fake videos or images created by AI showing people doing or saying things they didn't actually do) as if the people depicted were being forced to act against their will. It highlights the risk of candidates’ reputations being "irreparably tainted" by fabricated messages and requires "clear and conspicuous" disclosures when AI-generated content is used to portray candidates within 60 days of a primary election or 90 days of a general election.

The new law empowers individuals to file administrative complaints with the Secretary of State’s Office for election law violations and gives candidates the ability to sue over the dissemination of deepfakes. Colorado’s proactive stance sets a precedent for safeguarding the integrity of the electoral process and protecting voters from being misled by manipulated content, ensuring a fair and transparent election.
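The disclosure window described above can be sketched in a few lines of Python. This is only an illustration of the timing rule as the article states it (60 days for a primary, 90 days for a general election). The function name, the election-type labels, the example dates, and the assumption that the window is counted backwards from election day and includes both endpoints are all hypothetical and are not taken from the statute.

```python
from datetime import date

# Window lengths as stated in the article; everything else here is illustrative.
WINDOW_DAYS = {"primary": 60, "general": 90}


def disclosure_required(ad_date: date, election_date: date, election_type: str) -> bool:
    """Return True if an ad showing AI-generated content of a candidate
    falls inside the disclosure window before the election.

    Assumption: the window covers the N days leading up to and including
    election day; the actual law may count days differently.
    """
    if election_type not in WINDOW_DAYS:
        raise ValueError(f"unknown election type: {election_type!r}")
    days_until_election = (election_date - ad_date).days
    return 0 <= days_until_election <= WINDOW_DAYS[election_type]


# Hypothetical example dates.
general_election = date(2024, 11, 5)
print(disclosure_required(date(2024, 9, 1), general_election, "general"))  # True (65 days out)
print(disclosure_required(date(2024, 7, 1), general_election, "general"))  # False (127 days out)
```

An ad published 65 days before a general election falls inside the 90-day window, while one published 127 days before does not.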

Elections Under Siege

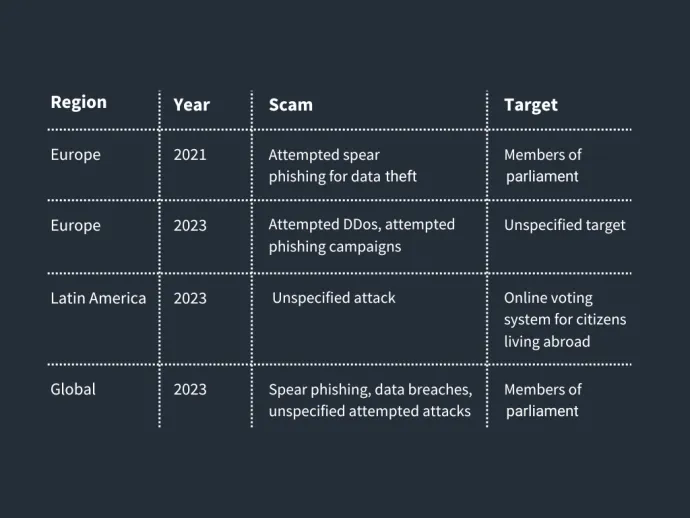

How would AI impact our elections? Well, consider this: elections have already faced cyberattacks through digital channels.

Historical data, including analyses of past election years and telemetry from significant events, show the severity of the issue and emphasize the need for proactive measures to safeguard electoral integrity.

We don’t have to look too far to find evidence. Case in point: the Polish Parliamentary Elections of 2023. Just two days before the elections, Polish media outlets released a video depicting a police intervention at one of the three polling stations in Poland. The intervention was prompted by an anonymous bomb threat received before the scheduled voting day. However, accounts associated with the Russian FIMI (foreign information manipulation and interference) infosphere quickly seized upon this video, reframing its context to suggest that explosions had already taken place at the polling station. The incident exemplifies a calculated effort to stoke fear around the purported bomb threats directed at polling stations, potentially discouraging voter turnout.

Recently, an AI-generated likeness of Indian Prime Minister Narendra Modi was spread on WhatsApp.

In the United Kingdom, the state of British healthcare and the National Health Service was a hot topic during the pre-election period. The urgency of this issue has been exploited by cybercriminals, leading to a 208% increase in URL-based election attacks in 2024 compared to 2023. These attackers have primarily targeted healthcare topics to deceive and manipulate voters.

Similarly, in Germany, we have observed an alarming increase in blocked attacks tied to URLs with politically controversial content. These attacks occurred in two significant waves: the first at the turn of the year and the second from April up to the election week. From the beginning of the pre-election period in December 2023 to the end of May 2024, the monthly number of blocked attacks surged by 233%. When comparing the entire six-month pre-election period to the same months of the previous year, there is an astounding year-on-year increase of nearly 5000% in blocked attacks. These attacks, originating from politically charged websites, include malvertising, phishing, and push notifications designed to install malware or steal sensitive personal data.

The 2024 European elections have seen a dramatic rise in politically themed cyberattacks on German users, with an increase of almost 5000%. Cybercriminals have employed various tactics, including fake ads, phishing, and dangerous push notifications, to exploit politically charged content. Keywords and names were frequently used as clickbait to lure victims. This surge in politically themed attacks underscores the need for robust cybersecurity measures to protect the integrity of the electoral process and ensure a safe digital environment for voters.
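To put percentages like these in perspective, it helps to see how a percentage increase translates into a multiple of the baseline. The short Python sketch below shows the arithmetic. The attack counts are hypothetical, chosen only so the numbers come out round; they are not the telemetry behind the figures above.

```python
def percent_increase(previous: float, current: float) -> float:
    """Percentage increase from a previous count to a current count."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) * 100.0 / previous


def growth_multiple(percent: float) -> float:
    """Convert a percentage increase into a multiple of the baseline."""
    return 1.0 + percent / 100.0


# Hypothetical counts: 200 blocked attacks in one month, 666 in a later month.
print(percent_increase(200, 666))   # 233.0
# A 233% increase means the later count is 3.33 times the earlier one.
print(growth_multiple(233))         # 3.33
# A 5000% increase means 51 times the baseline, not 50 times.
print(growth_multiple(5000))        # 51.0
```

In other words, an increase of nearly 5000% means roughly fifty times as many blocked attacks as in the same period a year earlier.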

Below are a few examples of elections that have faced some form of cyberattack:

Types of Election Scams

A myriad of election scams exists, ranging from seemingly innocuous political donation solicitations to sophisticated phishing attempts targeting voter registration databases. AI-driven scams exacerbate the threat landscape, as malicious actors leverage advanced algorithms to create videos, text and even email scams designed to sway public opinion and manipulate electoral outcomes. But AI isn’t the only threat to elections.

Potential threats to elections:

- Tampering with registrations or votes

- Committing voter registration fraud

- Hacking candidates' laptops, email or social accounts

- Hacking of campaign websites

- Leaking of confidential info

- Hacking government servers or communications networks

- Spreading misinformation on the election process, parties, candidates or results

- Altering results

- Hacking internal systems used by media or press

Here's what scams could look like to voters:

- Political donation scams

- Fake surveys or polls

- Voter registration phishing

- Scam texts

- Social media posts

- Fake data leaks

- Misinformation campaigns

Spotting the Scams

As scams become increasingly sophisticated, it is important to equip individuals with the necessary tools and knowledge to identify and mitigate any possible threat.

Looking Towards the Future

Emerging technologies, such as deepfakes, present both opportunities and risks. (Like all technology, they're good when put in responsible hands.) Though these tools can be exploited maliciously, they also hold the potential for positive applications.

Here, we delineate both the benefits and risks stemming from AI involvement in the electoral process.

Benefits of AI include:

- Enhanced security: AI presents an opportunity to reinforce cybersecurity measures.

- Voter engagement: AI-driven chatbots and virtual assistants can foster voter engagement by facilitating communication and ensuring broader accessibility.

- Data analysis: AI algorithms offer the capability to sift through vast datasets, uncovering valuable insights into voter trends, preferences, and potential irregularities.

Technological risks associated with AI include:

- Security vulnerabilities: AI systems, datasets, and models are not immune to cyberattacks.

- Bias and equity concerns: AI systems can inherit biases from their training data, potentially leading to unfair or discriminatory outcomes in voter registration, candidate selection, or data analysis processes.

- AI-driven cyberattacks: AI-powered data mining allows for targeted and sophisticated social engineering attacks, enabling threat actors to identify potential targets and vulnerabilities more effectively.

- Manipulation of AI-generated content: Synthetic media, such as AI-generated images, videos, and text, can be weaponized to spread disinformation or manipulate public opinion.

AI has become so widespread and accessible that it is a power that must be kept in the right hands and under a watchful eye.

Our Commitment

Our commitment to the ethical use of technology and our mission of powering digital freedom has led us to sign the tech accord "AI for Good." By aligning ourselves with this initiative, we emphasize our dedication to leveraging AI for positive impact and ethical practices. We recognize the transformative potential of AI in addressing societal challenges, including combating scams and safeguarding online communities.

We’ve already started using AI for good, with products like Norton Genie – an AI-powered tool used to detect scams via text, email and social media. We’ll continue to learn and leverage the power and potential of AI responsibly to promote a safer and more inclusive digital environment for all.